Property & Casualty

AI Risk | A Risk Manager’s Guide to the Basics


We hear about AI everywhere: in the news, in board discussions, on social media and in personal conversations. New AI products and tools keep emerging, and debates about their sustainability, safety and scope are trending. Less attention goes to how companies actually use AI, the risks that use creates and the tools available to reduce those risks from a business standpoint.

This series of white papers is designed to give risk managers a high-level understanding of how to assess AI risk, what protections can be implemented and what coverage is available under current insurance policies.

Artificial intelligence has been built on various machine learning techniques since the 1950s¹. Since 2010, advances in deep learning have allowed AI systems to learn from data with far less human direction, eventually bringing generative AI and large language models, such as ChatGPT, to the forefront. These developments have captured the imagination of companies and individuals everywhere and attracted significant investment in the field.

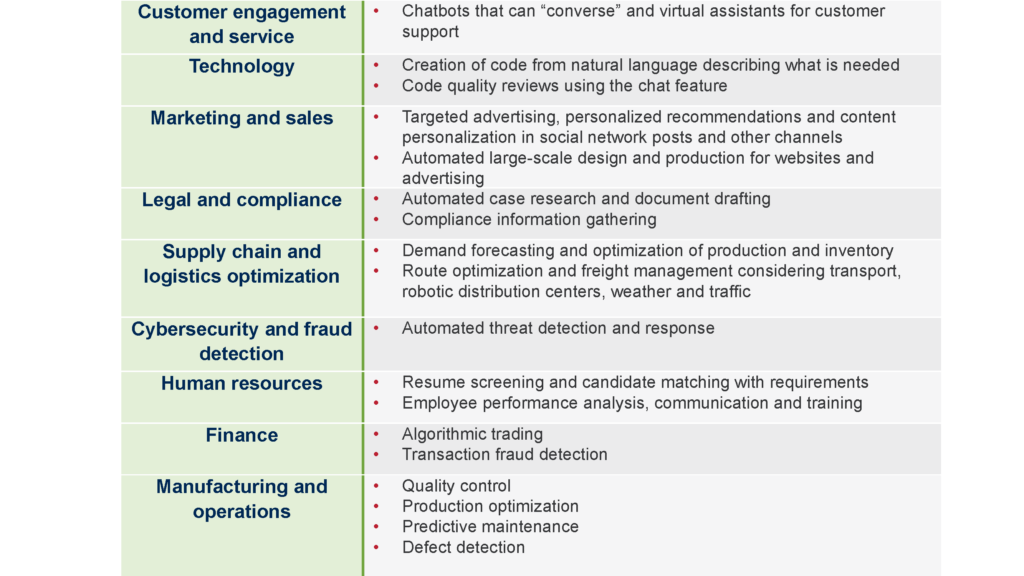

A Day in the Life of Commercial AI

Exposures Created by AI

Current AI litigation mainly centers on content creators suing AI companies for using their content to train AI models without permission. However, the rapid growth in AI use suggests that litigation and direct-impact risks will continue to evolve. Recognized risks include the following:

- AI hallucinations: AI models, particularly large language models (LLMs), can generate plausible but false information. Asked about crossing the English Channel on foot, one LLM invented a world record holder along with specific details of the crossing. Legal research performed with an LLM has produced citations to nonexistent cases.

- Privacy infringement: Private information shared with AI models can result in a data breach. AI notetakers on video calls can breach confidentiality agreements and obligations. “Shadow AI,” the use of LLMs to analyze information without corporate approval, is widespread; some surveys put it above 70%³.

- IP loss or infringement: AI-generated content can infringe rights in the pictures, code, music, trademarks and personal identifiers used to train the underlying algorithms. Courts are still deciding who is liable and the extent of damages.

- Model bias: Models can produce systematic errors that lead to unfair or prejudiced outcomes for certain groups. Resume-screening models have favored candidates resembling the workforce a company already employed, in conflict with requirements to limit prejudice and discrimination. Some facial recognition systems have proven less accurate for people of color.

- Reputation loss: Inaccurate or discriminatory AI-generated content that becomes public can damage a company’s reputation. In one example, an airline’s chatbot “hallucinated” a policy suggesting a passenger could apply for a bereavement fare retroactively after the death of a grandmother. Litigation followed, drawing widespread press coverage that required a public relations response.

- Business revenue loss: As companies rely more on AI and adjust staffing to capture its efficiencies, a technology failure could halt business functions for extended periods, because no human backup may be available while the issue is resolved. The resulting revenue losses will depend on how the AI platform is used, but they could be significant.

- Regulatory fines: Much of the proposed legislation does not distinguish between new deep learning models and older, established AI uses. When the proposed legislation takes effect, some current uses may become illegal.

At Brown & Brown, we believe that AI-related risks evolve rapidly and that their impact will vary with the implementing entity, the applications created, the controls developed and the technology used. AI may affect insurable and non-insurable risks across many lines of coverage. The Brown & Brown team has been tracking developments and can provide insight into emerging dangers. Our cyber risk models can incorporate AI risk scenarios to evaluate individual exposures. Please contact your Brown & Brown representative to learn more about our capabilities.

1. https://www.dataversity.net/a-brief-history-of-generative-ai/; https://toloka.ai/blog/history-of-generative-ai/; https://omq.ai/blog/history-of-ai/

2. Our World in Data, https://ourworldindata.org/grapher/test-scores-ai-capabilities-relative-human-performance; Kiela/Contextual.ai, https://contextual.ai/news/plotting-progress-in-ai/

3. “AI at Work Is Here. Now Comes the Hard Part” (microsoft.com); https://www.nextdlp.com/resources/blog/shadow-saas-genai-survey-results-2024